Building AI Agents: Less is Often More

Anthropic recently published a deep dive into building effective AI agents, and it closely matches my experience in the field. Since I work with these technologies daily, I wanted to share my thoughts and explain why their approach makes so much sense.

The Key Insight: Simplicity Wins

The key finding in Anthropic's article is that the most successful implementations weren't built on complex frameworks or specialized libraries; they used simple, composable patterns. This matches what I've seen in practice: the simplest solution is often the most robust. Many frameworks are available (LangGraph, Amazon Bedrock's AI Agent framework, etc.), but their extra layers of abstraction can obscure the underlying prompts and responses, which makes systems harder to debug, and they make it tempting to add complexity a simpler setup would never need.

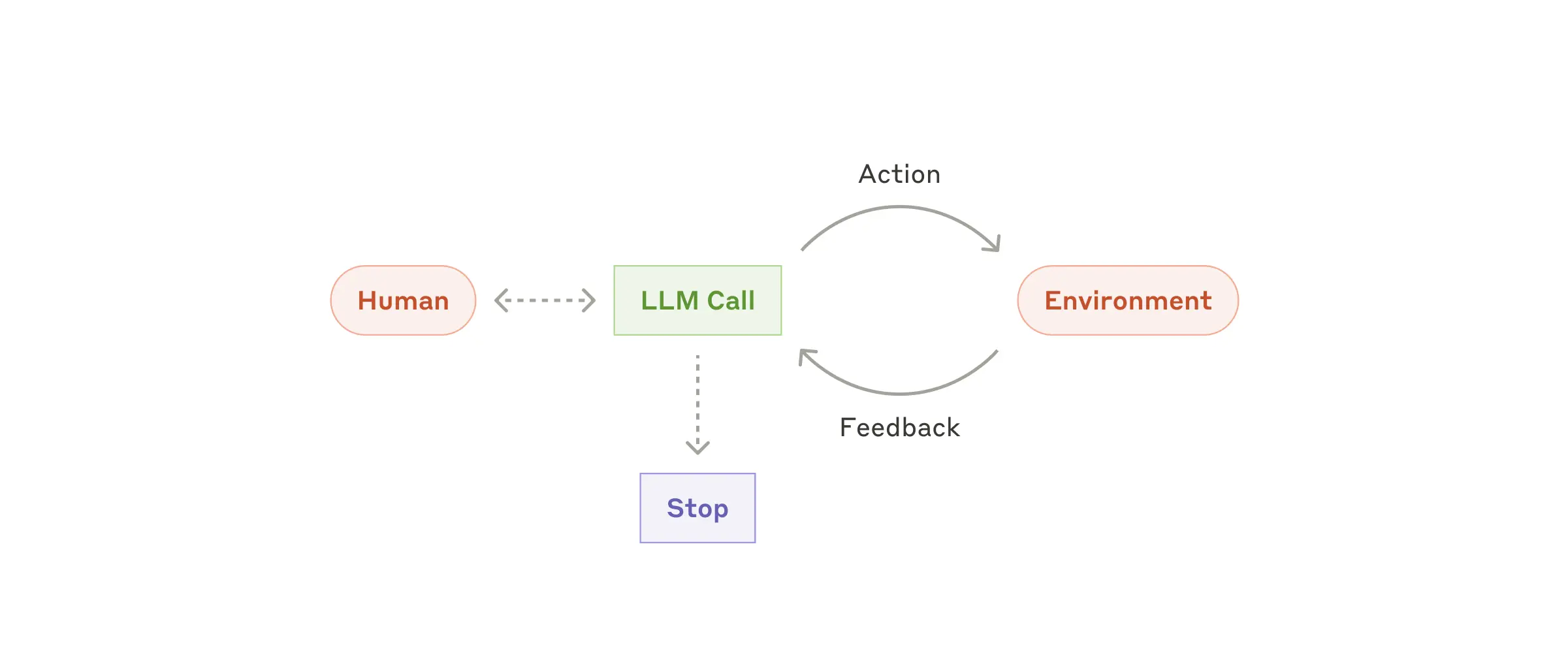

Understanding Workflows vs Agents

One crucial distinction they make is between workflows and agents:

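- Workflows follow predefined paths: LLMs and tools are orchestrated through code paths written in advance

- Agents dynamically direct their own processes: the LLM decides which steps to take and which tools to use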

In my experience, many tasks that people think need a complex agent can actually be solved with a simple workflow. I've found that starting with the simplest possible solution and adding complexity only when necessary saves development time and reduces potential points of failure.
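To make the distinction concrete, here is a minimal sketch. It assumes a hypothetical `llm` function (text in, text out) standing in for a real model API call, and a made-up reply format (`TOOL <name> <arg>` or `ANSWER <text>`) for the agent; neither comes from Anthropic's article. The workflow hard-codes its two steps, while the agent loops and lets the model pick the next action until it answers or hits a step limit.

```python
from typing import Callable

# Hypothetical stand-in for a real model call (text in, text out).
LLM = Callable[[str], str]


def summarize_workflow(llm: LLM, document: str) -> str:
    """Workflow: our code fixes the path (extract facts, then summarize)."""
    facts = llm(f"Extract the key facts:\n{document}")
    return llm(f"Summarize these facts in one sentence:\n{facts}")


def run_agent(llm: LLM, task: str, tools: dict[str, Callable[[str], str]],
              max_steps: int = 5) -> str:
    """Agent: the model chooses the next action on every turn."""
    transcript = f"Task: {task}"
    for _ in range(max_steps):
        # Assumed reply format: "TOOL <name> <arg>" or "ANSWER <text>".
        decision = llm(transcript)
        if decision.startswith("ANSWER "):
            return decision.removeprefix("ANSWER ")
        _, name, arg = decision.split(" ", 2)
        observation = tools[name](arg)
        # Feed the tool result back so the model can decide what to do next.
        transcript += f"\n{decision}\nObservation: {observation}"
    # A hard step limit keeps a confused agent from looping forever.
    return "Stopped: step limit reached"
```

Note how much more the agent needs (a reply protocol, a tool registry, a stopping condition) compared with the two-line workflow. That extra machinery is exactly the overhead worth avoiding when a fixed path does the job.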

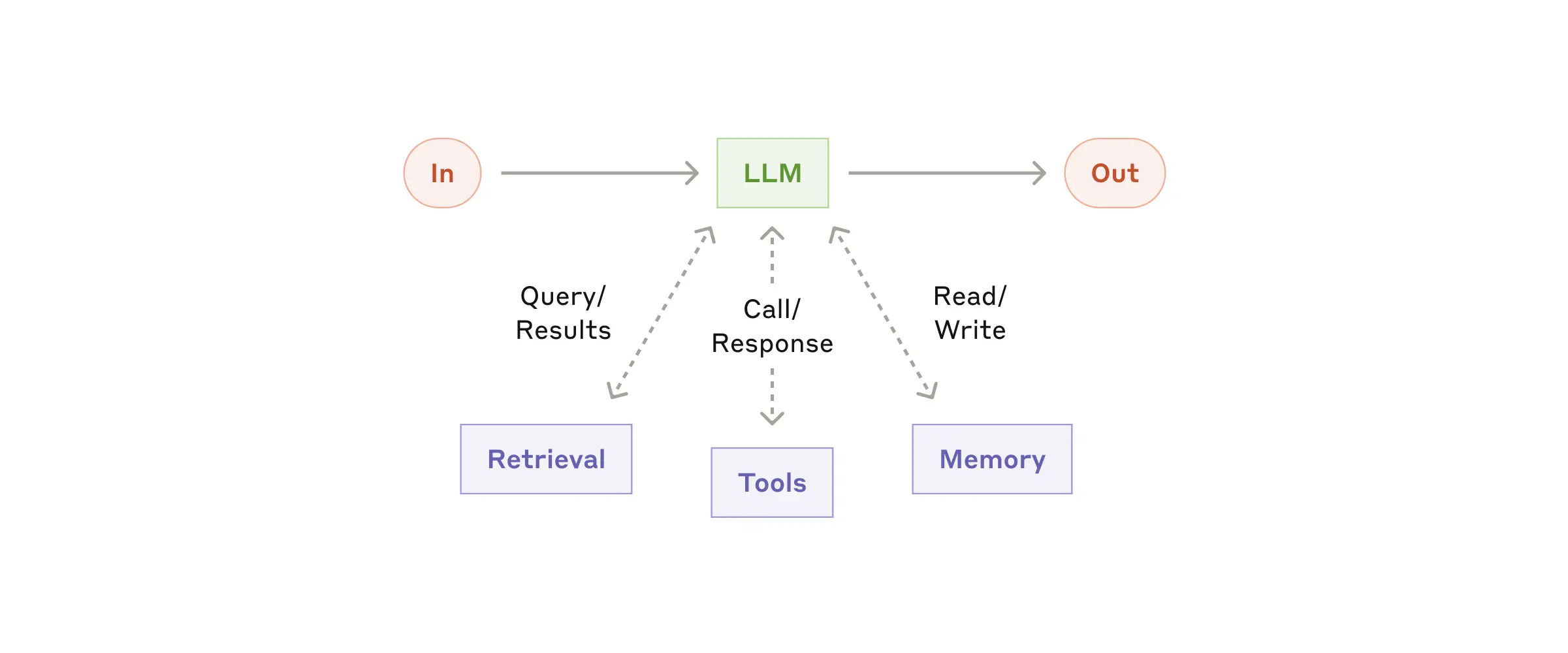

The Building Blocks Approach

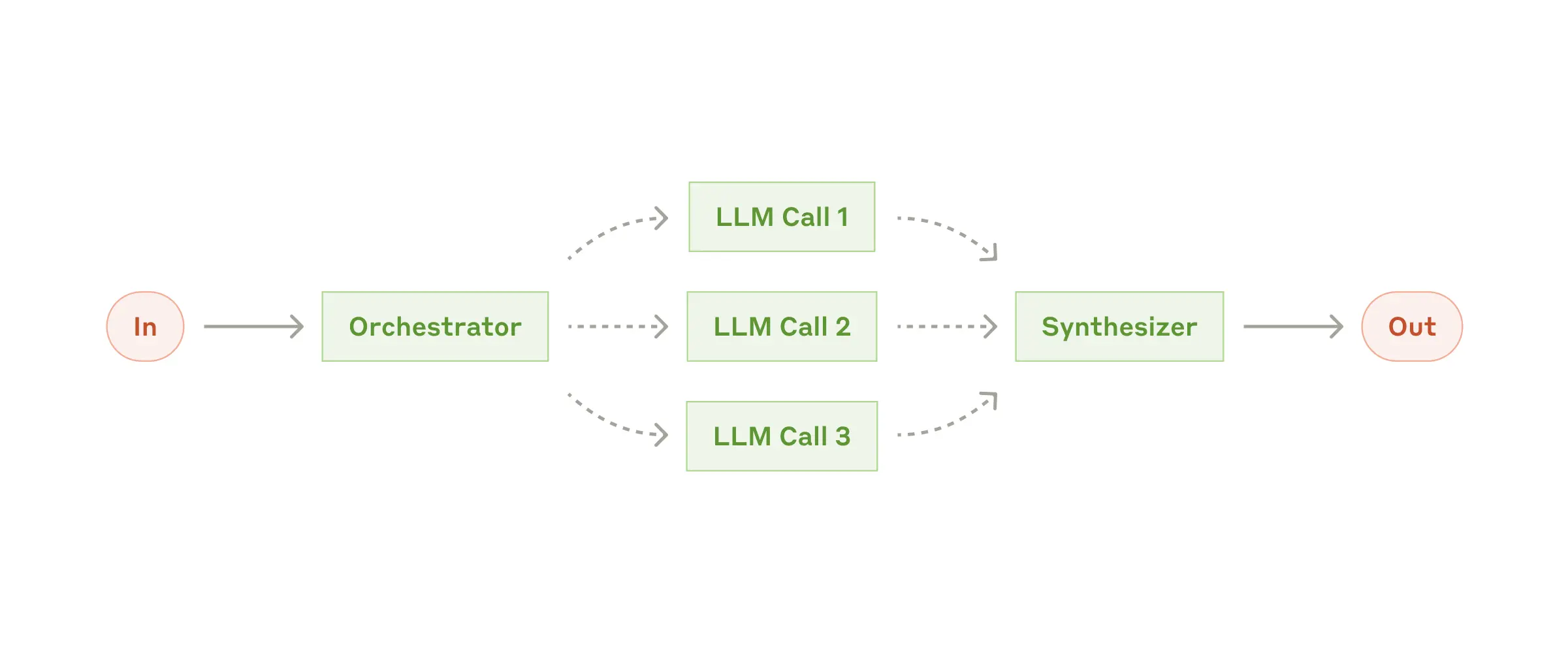

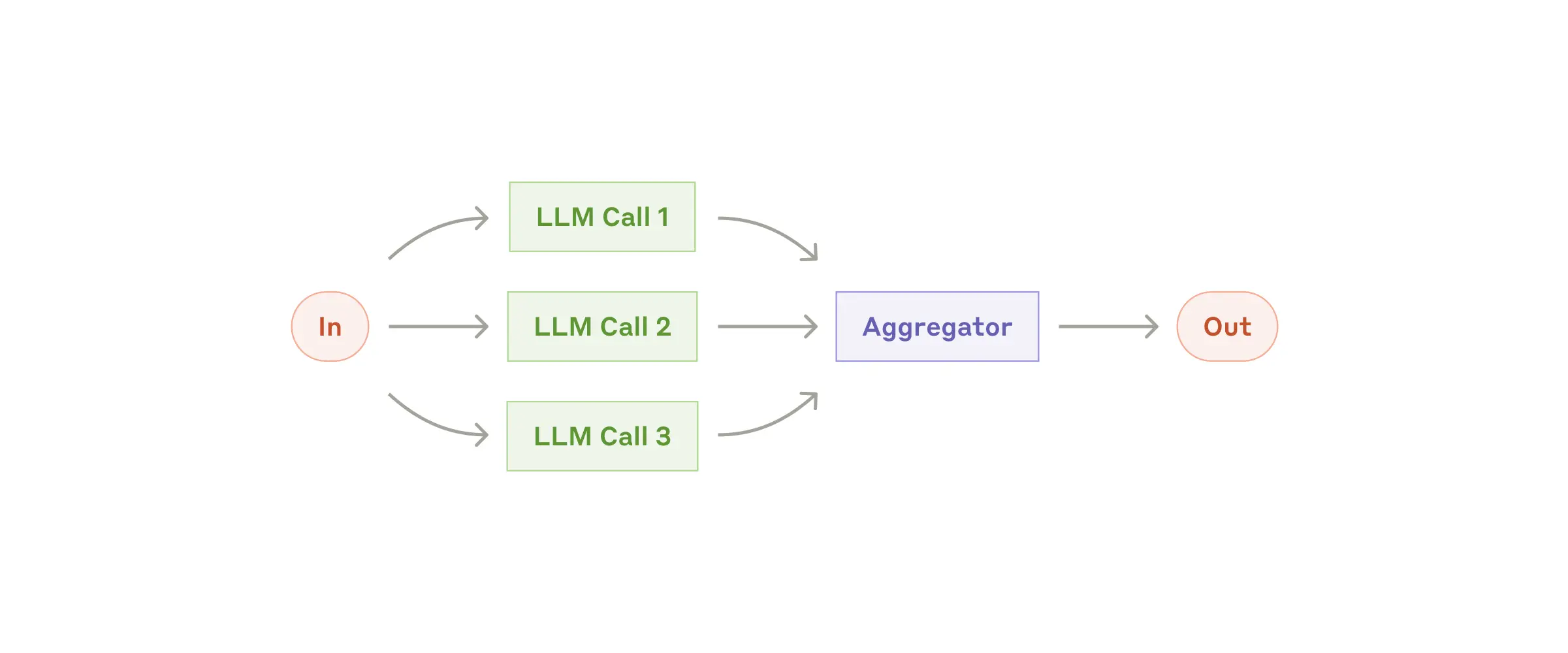

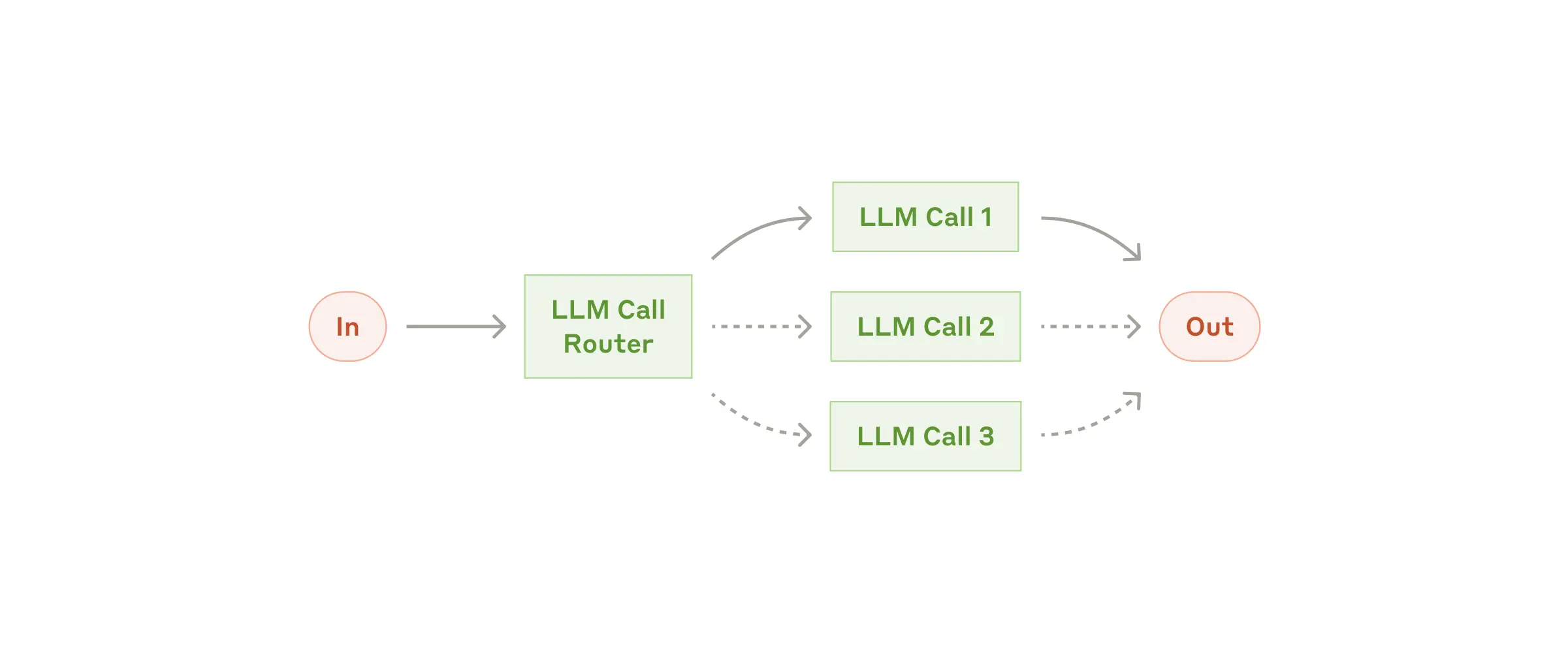

What I particularly appreciate about Anthropic's analysis is their breakdown of common patterns:

- Augmented LLMs (the basic building block: an LLM enhanced with retrieval, tools, and memory)

- Prompt chaining

- Routing

- Parallelization

- Orchestrator-workers

- Evaluator-optimizer
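Three of these patterns are small enough to sketch in a few lines each. This is my own illustration, not code from Anthropic's article: `llm` is again a hypothetical text-in, text-out model call, and the prompts, the `gate` check, and the label-only classification reply are assumptions.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

LLM = Callable[[str], str]  # hypothetical text-in, text-out model call


def chain(llm: LLM, text: str, steps: list[str],
          gate: Callable[[str], bool] = lambda _: True) -> str:
    """Prompt chaining: each step's output feeds the next; a programmatic gate can stop early."""
    for instruction in steps:
        text = llm(f"{instruction}\n\n{text}")
        if not gate(text):
            raise ValueError(f"Gate failed after step: {instruction!r}")
    return text


def route(llm: LLM, query: str, handlers: dict[str, Callable[[str], str]],
          default: str) -> str:
    """Routing: one classification call picks a specialized downstream handler."""
    label = llm(f"Classify into one of {sorted(handlers)}. "
                f"Reply with the label only.\n\n{query}").strip()
    # Fall back to the default handler if the model returns an unknown label.
    return handlers.get(label, handlers[default])(query)


def vote(llm: LLM, prompt: str, n: int = 3) -> str:
    """Parallelization (voting): run the same prompt n times concurrently, keep the majority."""
    with ThreadPoolExecutor(max_workers=n) as pool:
        answers = list(pool.map(llm, [prompt] * n))
    return Counter(answers).most_common(1)[0][0]
```

Each function is plain control flow around model calls, which is the point: none of these patterns strictly requires a framework.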

While I haven't personally implemented all of these patterns yet, I'm excited to experiment with them in future projects. The orchestrator-workers pattern, in particular, seems promising for some complex data processing tasks I'm working on.
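Here's a rough sketch of how I picture orchestrator-workers, assuming a hypothetical `llm` call and a "one subtask per line" plan format of my own choosing. Unlike plain parallelization, the subtasks aren't fixed in code: the orchestrator model decides them at runtime, workers handle each one, and a final call synthesizes the results.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

LLM = Callable[[str], str]  # hypothetical text-in, text-out model call


def orchestrate(llm: LLM, task: str, max_workers: int = 4) -> str:
    # 1. Orchestrator: the model decides how to split the task (assumed: one subtask per line).
    plan = llm(f"Break this task into independent subtasks, one per line:\n{task}")
    subtasks = [line.strip() for line in plan.splitlines() if line.strip()]

    # 2. Workers: each subtask gets its own model call; map() preserves input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        results = list(pool.map(lambda s: llm(f"Complete this subtask:\n{s}"), subtasks))

    # 3. Synthesis: one final call combines the worker outputs.
    combined = "\n".join(f"- {s}: {r}" for s, r in zip(subtasks, results))
    return llm(f"Combine these results into a final answer for: {task}\n{combined}")
```

In a data processing setting, the workers could just as well be ordinary functions rather than model calls; only the planning step needs the model's judgment.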

When to Use Agents (And When Not To)

Here's what I've learned about when to deploy agents:

- Use them when: you need flexibility and model-driven decision-making at scale

- Skip them when: a single well-prompted LLM call with retrieval would suffice
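For reference, the "single call with retrieval" baseline can be this small. The sketch below uses a toy word-overlap retriever as a stand-in for real embedding or BM25 search, and a hypothetical `llm` call; both are assumptions for illustration only.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical text-in, text-out model call


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Toy retriever: rank documents by word overlap with the query (stand-in for embeddings/BM25)."""
    q = set(query.lower().split())
    return sorted(docs, key=lambda d: len(q & set(d.lower().split())), reverse=True)[:k]


def answer(llm: LLM, query: str, docs: list[str]) -> str:
    """One well-prompted call: retrieved context plus the question, no agent loop."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return llm(f"Answer using only this context.\nContext:\n{context}\n\nQuestion: {query}")
```

If this baseline answers your questions well, an agent is probably unnecessary.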

The key is to resist the temptation to over-engineer. I've seen projects where a simple prompt with good context would have worked better than a complex agent system.

The Tools Question

One aspect that resonated strongly with me was their emphasis on tool design. In my work, I've found that spending time on clear tool documentation and testing is crucial. It's better to have a few well-designed tools than many poorly documented ones.
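As an illustration of what "well-designed" can mean, here is a sketch of one tool with a model-facing description, a JSON-Schema-style argument spec, and a dispatcher that turns mistakes into error messages the model can act on. The `Tool` class, `run_tool`, and `convert_celsius` are hypothetical names of my own; check your model provider's documentation for the exact tool-definition format it expects.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class Tool:
    name: str
    description: str               # written for the model: what it does, valid inputs, edge cases
    input_schema: dict[str, Any]   # JSON-Schema-style description of the arguments
    fn: Callable[..., Any]


def run_tool(tools: dict[str, Tool], name: str, args: dict[str, Any]) -> str:
    """Dispatch a model-requested call; return errors as text instead of crashing."""
    tool = tools.get(name)
    if tool is None:
        return f"Error: unknown tool {name!r}. Available: {sorted(tools)}"
    missing = [p for p in tool.input_schema.get("required", []) if p not in args]
    if missing:
        return f"Error: {name} is missing required argument(s): {missing}"
    try:
        return str(tool.fn(**args))
    except (TypeError, ValueError) as exc:
        return f"Error: {exc}"


def convert_celsius(celsius: float) -> float:
    """Hypothetical example tool."""
    return celsius * 9 / 5 + 32


TOOLS = {
    "convert_celsius": Tool(
        name="convert_celsius",
        description=("Convert a temperature from degrees Celsius to Fahrenheit. "
                     "Pass a plain number such as 21.5; do not include units."),
        input_schema={
            "type": "object",
            "properties": {"celsius": {"type": "number", "description": "Temperature in °C"}},
            "required": ["celsius"],
        },
        fn=convert_celsius,
    )
}
```

The description does most of the work here: it tells the model exactly what to pass, the same way you'd document a function for a junior developer.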

Looking Forward

I'm planning to experiment with some of these patterns in my upcoming projects, particularly the evaluator-optimizer workflow for some data analysis tasks. I'll be sure to share my findings in future posts about what works and what doesn't in real-world applications.
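Before running those experiments, this is roughly the loop I have in mind: one model call generates a draft, another evaluates it, and the feedback drives the next revision until the evaluator passes it or a round limit is hit. The `generate`/`evaluate` callables and the `PASS` / `FAIL: <feedback>` reply format are assumptions of this sketch.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical text-in, text-out model call


def evaluate_optimize(generate: LLM, evaluate: LLM, task: str,
                      max_rounds: int = 3) -> tuple[str, bool]:
    """Return (final draft, whether the evaluator accepted it)."""
    prompt = f"Task: {task}"
    draft = ""
    for _ in range(max_rounds):
        draft = generate(prompt)
        # Assumed evaluator reply format: "PASS" or "FAIL: <feedback>".
        verdict = evaluate(f"Task: {task}\nDraft:\n{draft}\nReply PASS, or FAIL: <feedback>.")
        if verdict.startswith("PASS"):
            return draft, True
        feedback = verdict.removeprefix("FAIL:").strip()
        # The next draft sees the previous attempt plus the evaluator's critique.
        prompt = (f"Task: {task}\nPrevious draft:\n{draft}\n"
                  f"Feedback: {feedback}\nRevise the draft.")
    return draft, False
```

The round limit matters in practice: without it, a strict evaluator and a weak generator can loop indefinitely.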

My Take

While Anthropic's article provides an excellent framework, my own experience confirms its core message: start simple. Modern language models are incredibly capable on their own, and often the best approach is to let them do their thing without too much architectural overhead.

The key is to understand when additional complexity adds value and when it's just adding potential points of failure. In most cases, I've found that a well-crafted prompt and thoughtful system design will outperform a complex agent architecture.